Clinical Studies Show Advanced Hearing Aid Technology Reduces Listening Effort

By Veronika Littmann, PhD; Joel Beilin, MScEE; Matthias Froehlich, PhD; Eric Branda, AuD; and Patrick J. Schäfer, MS

Reused with permission from Hearing Review. All rights reserved.

When we think of hearing aids that are optimal for a given patient, one of the first things that comes to mind is improved speech understanding, especially in background noise. However, one factor that does not receive the attention that it should is the amount of listening effort required by the patient to realize this optimal fitting in everyday use.

Even people with normal hearing experience situations that require increased listening effort. That is, we have to “work harder” to hear what we want to hear. Often this involves situations with excessive background noise, but it also occurs with soft speech, poor cell phone connections, trying to understand a speaker with a pronounced foreign accent, and many other difficult listening situations. When hearing loss is present, these challenges become even more pronounced.

Generally, listening effort relates to speech understanding; as effort increases, hearing-impaired individuals are forced to recruit additional cognitive resources to keep up. We know that effortful listening and cognitive load negatively impact simultaneous mental processes (eg, multi-tasking). The continued use of these additional cognitive resources also leads to listening fatigue, and often to the rejection of hearing aids.

Fatigue is usually thought of as tiredness or a lack of energy. It is commonly associated with feelings of diminished focus, lack of concentration, and mental deficiency. We know that even mild hearing loss causes increased listening effort, which in turn leads to increased listening fatigue. This can have significant consequences on patients’ energy levels, which influences how much they engage in speech communication, or in some cases, any activity that requires mental energy.

It is clear that increased listening effort can impact the benefit obtained from hearing aids in at least two ways. In the short-term, because the increased effort requires additional cognitive resources, simultaneous mental activity will be impacted (dual-tasking). If that task is word recall or mentally filling in the word of a sentence that was not understandable, speech recognition suffers. Alternately, the simultaneous task might involve reaction time or cognitive decision-making, in which case the patient needs to decide what task is most important (eg, when driving a car and simultaneously trying to follow a difficult-to-understand conversation). Over several hours, increased listening effort leads to listening fatigue, and also indirectly results in reduced speech understanding, as the patient will not have the mental energy to stay “tuned in.” Therefore, it’s critical to keep listening effort at a minimum for all listening situations throughout the day, to reduce the likelihood of fatigue.

We can reduce listening effort by making the listening task easier. For people with hearing impairment, it is reasonable to think that the fitting of appropriate hearing aids will reduce listening effort. However, it’s also important to point out that the most effective method of reducing listening effort is to not listen. This makes the task very easy, as there is no task. Hence, we need to optimize the hearing aid listening experience to prevent the latter from happening.

Research has shown that, as expected, the use of hearing aids does reduce listening effort for the hearing impaired.1 We would assume that hearing aid features that are known to improve speech understanding in background noise (eg, directional technology) would reduce listening effort even more, but research in this area has not been conclusive.2 This could be because of the specific technology that was studied, the design of the study, or the metric that was used to assess listening effort.

Methods to Assess Listening Effort

Several approaches have been used in research to assess listening effort. These include physiologic measures (eg, pupil dilation, heart rate, skin conductance, and salivary cortisol levels), recall and reaction time paradigms, and subjective assessment scales. While it may seem intuitive to simply ask the patient how difficult the listening experience might be, these scales are not always the most ideal measure. The patient might associate effort with something different, such as speech understanding, or the change in listening effort may be too subtle to observe. It’s important, therefore, that a test of listening effort is sensitive, reliable, and valid. It’s certainly likely that, when the most appropriate assessment of listening effort is used, it will be possible to identify hearing aid features and algorithms that do assist in reducing listening effort, and subsequently listening fatigue.

In the current study, we used an innovative objective method for measuring listening effort based on the electroencephalogram (EEG) recordings of the electrical events produced by the brain during a speech recognition task3; the EEG sample was extracted to precisely coincide with the given task. The angular entropy of the EEG phase was then examined. We would expect that smaller values of the angular entropy reflect a more “ordered” process of the phase distribution, which relates to increased listening effort. That is, for a non-effortful listening environment, the phase is more uniformly distributed on the unit circle than for a demanding condition, where there is a specific focus. For meaningful interpretation of the EEG activity, mathematical calculations of the phase vector were conducted (the Rayleigh Test), which then resulted in scaled values from 0.0 (no effort) to 1.0 (extreme effort).
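To make the idea of angular entropy more concrete, the sketch below estimates a normalized Shannon entropy from a set of phase angles by binning them around the unit circle. It is only an illustration: the binned estimator, the function name `angular_entropy`, the bin count, and the simulated phase samples are all assumptions, since the article does not specify how the entropy was computed. In this sketch, values near 1.0 indicate a phase that is spread almost uniformly around the circle, and values closer to 0.0 indicate a more “ordered” phase that clusters around one direction.

```python
import math
import random


def angular_entropy(phases, bins=16):
    """Normalized Shannon entropy of phase angles (radians).

    Returns about 1.0 for a uniform spread around the unit circle and
    values toward 0.0 as the phases concentrate in fewer bins.
    The binning approach is an assumption made for illustration.
    """
    if not phases:
        raise ValueError("need at least one phase sample")
    counts = [0] * bins
    for p in phases:
        wrapped = p % (2 * math.pi)  # map the angle into [0, 2*pi)
        idx = min(int(wrapped / (2 * math.pi) * bins), bins - 1)
        counts[idx] += 1
    n = len(phases)
    h = -sum((c / n) * math.log(c / n) for c in counts if c > 0)
    return h / math.log(bins)  # divide by the maximum possible entropy


rng = random.Random(0)
# Simulated phase samples for illustration only; these are not study data.
uniform_phases = [rng.uniform(-math.pi, math.pi) for _ in range(5000)]
clustered_phases = [rng.gauss(0.0, 0.2) for _ in range(5000)]

h_uniform = angular_entropy(uniform_phases)
h_clustered = angular_entropy(clustered_phases)
print(f"uniform phase entropy:   {h_uniform:.3f}")
print(f"clustered phase entropy: {h_clustered:.3f}")
```

The clustered sample yields a clearly lower entropy than the uniform one, which is the contrast the entropy measure is meant to capture.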

Using this objective method of listening effort, we examined the effectiveness of two new features of the Signia primax Pure® 7px hearing aids. We presented a group of hearing-impaired participants with difficult speech-in-noise listening tasks, and recorded the EEG activity when a given primax feature was “On” vs “Off.” To establish the relationship between this objective measure and the participants’ behavioral perceptions, we also had the listeners rate listening effort on a 13-point scale.

Clinical Research Methods

The three features of the new Signia primax instruments that were studied were two aspects of SPEECH, and EchoShield. SPEECH is a comprehensive steering engine that orchestrates the different features and processes to minimize listening effort, regardless of the situation. It applies three principal strategies to improve the signal for the user: analyzing Signal Type, Direction, and Loudness.

When assessing “Signal Type,” SPEECH identifies which part of the signal is speech and which is noise, then activates features to attenuate the noise component. In the “Direction” assessment, the direction of the incoming sound is analyzed, and directivity features are adjusted to allow the target speech to stand out as clearly as possible. In the third component (“Loudness”), the level of the loudest speech signal is identified and maintained while softer sounds are attenuated to further enhance the contrast. Of these three aspects of SPEECH, “Direction” and “Loudness” were investigated in this clinical study.

The third feature of Signia primax that was studied was EchoShield. Reverberation of speech and background noise, which occurs in many listening situations, is known to degrade speech quality and reduce speech understanding. Conventional low-level input compression, which adds gain to these softer reflected sounds, can make reverberation effects even worse. The EchoShield algorithm operates using an analysis of the level difference between the direct sound and the reflected sounds of a given signal, ensuring that the softer reflections are not over-amplified.

For all features tested, listening effort was assessed with the feature “On” versus “Off.” For SPEECH “Direction,” the “Off” condition was realized by using the TruEar microphone mode, whereas “On” corresponded to Narrow Directionality. Furthermore, an additional intermediate setting—realized by conventional adaptive directional microphones—served as intermediate reference between TruEar and Narrow Directionality.

For evaluation of SPEECH, the Hearing In Noise Test (HINT)4 was used as the target speech material, with a background noise signal composed of competing HINT sentences. The target speech signal was presented from 0° azimuth, and the competing speech was from seven loudspeakers surrounding the listener. Similarly, for the EchoShield tests, the reverberant target speech was presented from 0° using the same speaker arrangement, while soft (~55 dB SPL) reverberant cafeteria noise was presented from the other directions. For the EchoShield tests, speech material was taken from the Connected Speech Test (CST).5

Each feature assessment was conducted at a signal-to-noise ratio (SNR) that was adjusted individually to a point where the listener could “just understand” the target speech with the particular feature switched off. At this SNR, the sentences in background noise were then presented with EEG activity collected for all conditions (feature “On” and “Off”, plus the intermediate setting for SPEECH “Direction”). At the conclusion of each test run, the participants also rated their subjective listening effort.

The EEG was recorded using a commercially available bio-signal amplifier. Eight active electrodes were placed according to the international 10-20 system, with Cz as reference and a ground electrode placed at the upper forehead. The data were bandpass-filtered from 0.5 to 40 Hz. A trigger signal indicated the onset and offset of each target speech stimulus, and therefore, the EEG data could be analyzed precisely during the presentation of the target speech. To determine if the instantaneous phase was uniformly distributed (random process; little or no effort) around the unit circle, or if the phase departed from uniformity and had a mean direction (greater listening effort), the Rayleigh Test was applied to the phase data, resulting in a scaled effort measure ranging from 0.0 to 1.0.6
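The uniformity check described above can be sketched with standard circular statistics. The example below computes the mean resultant length of a set of phase angles, which runs from 0.0 (phases spread uniformly around the unit circle) to 1.0 (all phases pointing in one direction), together with the classic Rayleigh statistic z = n·R². This is a minimal sketch under stated assumptions: the article does not describe how the study's measure was scaled, so treating the mean resultant length as the 0.0 to 1.0 effort value is an assumption, and the function names and simulated phase samples are hypothetical.

```python
import cmath
import math
import random


def mean_resultant_length(phases):
    """Length of the average unit phase vector.

    0.0 means the phases are spread uniformly; 1.0 means they all
    share one direction. Using this as the scaled effort value is an
    assumption; the study's exact scaling is not given in the article.
    """
    if not phases:
        raise ValueError("need at least one phase sample")
    total = sum(cmath.exp(1j * p) for p in phases)
    return abs(total) / len(phases)


def rayleigh_z(phases):
    """Rayleigh test statistic z = n * R^2; larger z argues against uniformity."""
    r = mean_resultant_length(phases)
    return len(phases) * r * r


rng = random.Random(0)
# Simulated instantaneous phases (radians), for illustration only.
uniform_phases = [rng.uniform(-math.pi, math.pi) for _ in range(2000)]
clustered_phases = [rng.gauss(0.5, 0.3) for _ in range(2000)]

r_uniform = mean_resultant_length(uniform_phases)
r_clustered = mean_resultant_length(clustered_phases)
print(f"uniform phases:   R = {r_uniform:.3f}, z = {rayleigh_z(uniform_phases):.1f}")
print(f"clustered phases: R = {r_clustered:.3f}, z = {rayleigh_z(clustered_phases):.1f}")
```

In a real analysis the phase samples would come from the filtered EEG within the trigger-marked stimulus window (for example, via an analytic-signal or wavelet phase estimate); that step is omitted here to keep the sketch self-contained.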

The study was conducted at an independent research laboratory at the University of Northern Colorado. Study participants consisted of 12 adults with bilaterally symmetrical downward-sloping sensorineural hearing losses. They were all experienced hearing aid users. A subset of subjects participated in the tests of SPEECH, assessing the “Loudness” aspect.

Results

To examine the effectiveness of the new features of primax, the collected EEG findings were examined, comparing brain activity for the different conditions (eg, uniformity when the feature was activated to when it was not). For both SPEECH “Direction” and EchoShield, the objective brain behavior measures revealed a significant reduction in listening effort when the feature was activated (p<.05). SPEECH “Loudness” results did not reach statistical significance due to the lower number of subjects tested.

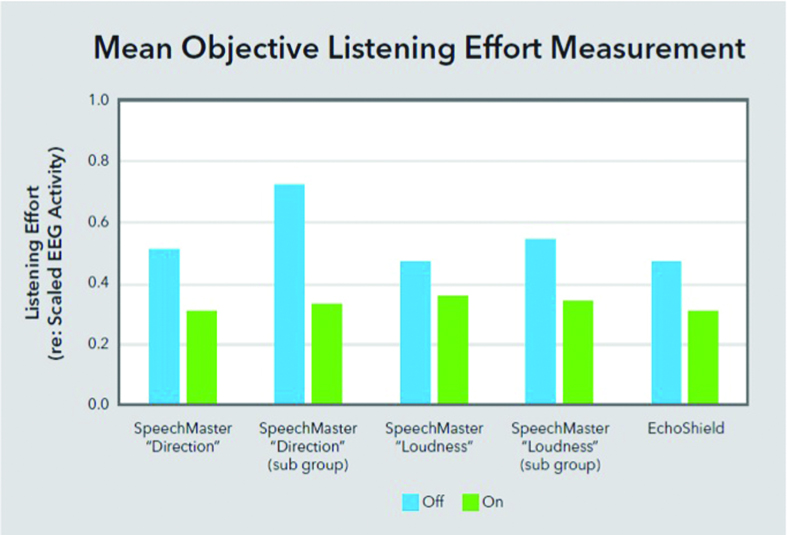

The objective listening effort results, expressed as mathematically scaled uniformity, are shown in Figure 1 for all three features, illustrating the reduced effort when each feature was activated. For the SPEECH “Direction” findings, a bimodal distribution for the omnidirectional (TruEar) condition was observed. That is, 45% of the participants had an effort of >0.6 for the omnidirectional condition, whereas the other 55% only had an effort of around 0.3 for this same condition. The subgroup shown in Figure 1 consists of those participants who had the greatest effort rating for the control condition (observe the increased benefit for these individuals).

Figure 1. Average objective listening effort, based on scaled EEG activity, for the various tested features and subject groups for the feature “Off” (blue), and “On” (green).

During SPEECH “Loudness” testing, one subject was averse to any noise reduction feature, and explicitly stated so. Unsurprisingly, this subject preferred the setting with the least effect on the background signal in his subjective ratings (ie, SPEECH “Loudness” Off). Interestingly, SPEECH “Off” showed more localized brain activity in the objective measurements, resulting in lower values of the scaled uniformity. Results excluding this particular subject are shown in Figure 1 and labeled SPEECH “Loudness” (sub group).

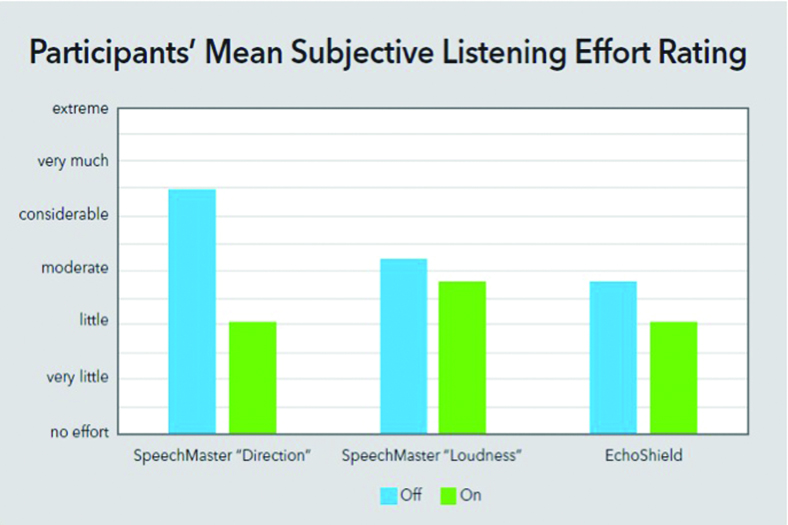

As a cross-check to these objective findings, we reviewed the participants’ behavioral ratings that were completed at the same time as the EEG recordings. These findings were in close agreement with the objective brain behavior data (Figure 2).

Figure 2. Average subjective listening effort ratings (13-point rating scale) for the three features investigated, in “Off” condition (blue), and “On” condition (green).

The most notable findings of the behavioral testing were those for the listening task used for the SPEECH “Direction,” where the participants were surrounded by competing speech signals. Observe that the behavioral ratings went from above “considerable effort” to “little effort.”

Discussion

The results of this investigation show a clear pattern of reduced listening effort based on EEG activity when the new features of Signia primax are activated. Moreover, there is close agreement between the measures obtained from EEG activity and the participants’ subjective ratings. As we mentioned earlier, not all studies of listening effort have found the significant benefit for directional technology that we have shown here. It’s difficult to determine if this is because of study design, measurement procedures, or the directional technology used in these other studies. Regarding the latter, it is important to point out that the directional feature that we examined, SPEECH “Direction”, employs wireless binaural beamforming.6,7 This level of technology seldom has been used in studies of listening effort; however, we know that for speech recognition in background noise, we can expect significant improvement for this technology when compared to traditional adaptive directional processing.8,9

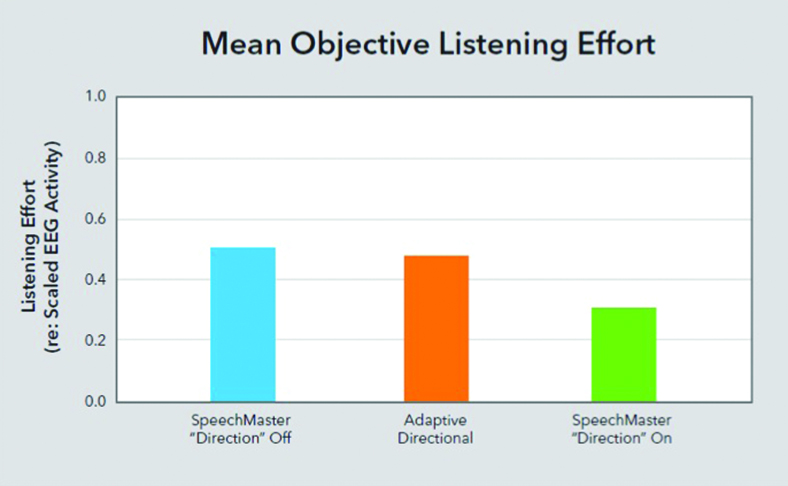

Recall that, as a reference, we collected EEG activity for listening effort with conventional adaptive directional processing at the same time that the TruEar and SPEECH data were collected. Figure 3 shows the mean scaled EEG data for all participants for the conventional adaptive directional processing, compared to the other two directional settings. Observe that, while SPEECH “Direction” shows a significant improvement in listening effort compared to the “Off” condition (p<.05), there is very little improvement in listening effort when the more common adaptive directional processing was used (p>.05). In other words, had we only used the directional technology available in most other hearing instruments, our conclusions would have been quite different. The data shown in Figure 3 clearly illustrate that the level of technology can impact the benefit in listening effort that is obtained from a given product.

Figure 3. Mean objective listening effort (scaled EEG findings) for three directional settings: SPEECH “Direction” Off (TruEar), Adaptive Directional, and SPEECH “Direction” On.

Summary

While it certainly is important to focus research and development on hearing aid technology that improves speech understanding, we must also consider the listening effort required. We know that effortful listening can increase cognitive load, which will negatively impact simultaneous mental processes, such as multi-tasking. In turn, this can lead to listening fatigue associated with tiredness or a lack of energy, lack of concentration, and mental deficiency. Listening fatigue has also been associated with an increased risk of falling among older adults.10 Clearly, reducing listening effort is an important part of the overall hearing aid fitting process.

We have shown that the new features of Signia primax significantly reduce listening effort and that this reduction is present for different listening conditions. Importantly, this was documented using an objective assessment of brain activity. When listening effort is reduced, patients are better equipped to engage in other mental activities, including focusing on speech communication. The expected outcome is improved benefit and satisfaction with hearing aids.

Acknowledgements

We would like to acknowledge the research inspiration and technical support of Key Numerics, in particular Daniel Strauss and Farah Corona-Strauss.

References

1. Picou EM, Ricketts TA, Hornsby BW. How hearing aids, background noise, and visual cues influence objective listening effort. Ear Hear. 2013;34(5):e52-e64.

2. Hornsby BW. The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands. Ear Hear. 2013;34(5):523-534.

3. Schafer PJ, Serman M, Arnold M, Corona-Strauss FI, Strauss DJ, Seidler-Fallbohmer B, Seidler H. Evaluation of an objective listening effort measure in a selective, multi-speaker listening task using different hearing aid settings. Conf Proc IEEE Eng Med Biol Soc. 2015:4647-4650.

4. Nilsson M, Soli SD, Sullivan JA. Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95(2):1085-1099.

5. Cox R, Alexander G, Gilmore C. Development of the connected speech test (CST). Ear Hear. 1987;8(5s):119s-126s.

6. Kamkar-Parsi H, Fischer E, Aubreville M. New binaural strategies for enhanced hearing. Hearing Review. 2014;21(10):42-45.

7. Herbig R, Froehlich M. Binaural beamforming: The natural evolution. Hearing Review. 2015;22(5):24.

8. Powers TA, Froehlich M. Clinical results with a new wireless binaural directional hearing system. Hearing Review. 2014;21(11):32-34.

9. Froehlich M, Freels K, Powers TA. Speech recognition benefit obtained from binaural beamforming hearing aids: comparison to omnidirectional and individuals with normal hearing. May 2015. AudiologyOnline article 14338. Available at: http://www.audiologyonline.com

10. Lin FR, Ferrucci L. Hearing loss and falls among older adults in the United States. Arch Intern Med. 2012;172(4):369-371. doi:10.1001/archinternmed.2011.728.