Power the conversation with Signia Integrated Xperience and RealTime Conversation Enhancement

Niels Søgaard Jensen, Barinder Samra, Homayoun Kamkar Parsi, Sascha Bilert, Brian Taylor

Abstract

Group conversations in background noise are among the most challenging situations for people with hearing loss, and many hearing aid wearers find it hard to participate and contribute when being in a group conversation, especially in noise. In this paper, we present RealTime Conversation Enhancement technology, which is part of the Signia Integrated Xperience platform, as a solution to the problems experienced in group conversions in noisy environments. Using a new multi-stream architecture, RealTime Conversation Enhancement analyzes the conversation situation, detects conversation partners and creates a live auditory space, which augments the speech and immediately adapts to changes in the conversation layout. Results from a study on the perceptual effects show that a significant improvement in speech understanding is offered by RealTime Conversation Enhancement. In a test simulating a group conversation scenario, 95% of the participants showed better performance with RealTime Conversation Enhancement.

Introduction

The perceived benefits of wearing hearing aids have improved substantially during the last decade. This is, for example, shown by MarkeTrak survey data (Picou, 2022) where the percentage of hearing aid owners who reported that hearing aids regularly improved their quality of life increased from 48% in 2015 to 64% in 2022. This significant increase can, to a large extent, be explained by the constant development and release of new technology and features in hearing aids and accompanying smartphone apps that gradually have improved the hearing aid performance and the wearer experience (Taylor & Jensen, 2023).

However, while some problems experienced frequently by hearing aid wearers one or two decades ago have been markedly reduced or even eliminated by new technology, there are still problems that affect wearers’ satisfaction and quality of life negatively and remain to be addressed. Picou (2022) lists MarkeTrak data on the percentage of hearing aid owners and non-owners (with hearing loss) who are satisfied with their ability to hear in different types of listening situations. Among non-owners, situations involving “following conversations in the presence of noise” and “conversations with large groups” are the ones showing the lowest share of satisfied respondents. Even though hearing aid owners show a substantial increase in the overall share of satisfied respondents (compared to non-owners), these specific situations are still among those with the lowest percentage of satisfied hearing aid owners. This finding will come as no surprise to hearing care professionals who regularly encounter issues related to group conversations in noise during the process of fitting hearing aids.

Nicoras et al. (2022) investigated what it takes to make a conversation successful and found the factor “being able to listen easily” to be the most important for conversation success for both participants with hearing loss and participants with normal hearing. Also, both groups found it to be more important in a group conversation than in a one-on-one conversation. It is obvious and unsurprising that the ability to hear and understand is key to a successful group conversation. However, the research also showed that other factors are important, e.g., “sharing information as desired” – which is about information exchange and achieving outcomes. Thus, conversation success is not only about the ability to hear and understand what is being said, but also about the ability to contribute actively to the conversation.

A conversation is also a dynamic process, involving two or more interlocutors (conversation partners) who take turns speaking. The way hearing loss and use of hearing aids (by one of the interlocutors) affect conversation dynamics has gained an increased level of interest from researchers over the past few years. In a study on one-to-one conversations between participants with hearing loss (HL) and participants with normal hearing (NH), Petersen et al. (2022a) found that the timing of turn-starts exhibited more variability for the unaided HL participants than for the NH participants. However, when hearing aids were worn, the variability was reduced and approached that observed for the NH participants. In another study on group conversations involving three interlocutors (two NH and one HL), Petersen et al. (2022b) found that hearing aid amplification not only reduced the speech level of the hearing aid wearer but also the speech level of the NH interlocutors. Thus, hearing loss and hearing aids not only affect the individual wearer’s ability to hear and understand what is being said in a conversation. They affect the dynamics of the entire conversation, e.g., turn-taking and speech levels.

Hearing aid wearers who experience problems in group conversations have some options to improve on the problems with the hearing aids they already have, e.g., by carefully choosing the environment, in which the conversation takes place, and by picking the right position in the room, e.g., when dining out (Eberts, 2020). However, conversations cannot always be planned or controlled. It is therefore an important task – and a key challenge – for hearing aids to provide appropriate support when the wearer wants to actively participate in a conversation.

The hearing aid processing approaches traditionally applied to improve speech-in-noise performance often fall short in group conversations. Even though use of a directional microphone with a narrow beam may provide a benefit when the wearer listens to a person just in front of them, it will cut out simultaneous talkers from other directions, and it will typically not allow the wearer to turn their head to look in other directions (away from the active talker). Alternatively, a broader directionality (i.e., a wider beam) can be applied to capture all talkers in the group conversation. However, this will typically require the use of more aggressive noise reduction algorithms to remove disturbing surrounding noise. This is not always effective in improving speech perception, and such algorithms may add a considerable amount of distortion to the sound and create an overall poor listening experience when speech and noise is processed together (single-stream processing).

Signia is a long-time leader in the development of solutions that address the speech-in-noise problem experienced by hearing aid wearers. Considerable progress has been made through the release of new products and features that highlight speech and suppress noise via the use of advanced signal processing schemes, such as binaural processing, directionality, and noise reduction. In 2021, the launch of the Signia Augmented Xperience (AX) platform provided yet another leap forward. By introducing Augmented Focus with its split processing approach (Best et al., 2021), new speech understanding benefits were offered to the wearer in challenging communication situations (Jensen et al., 2021; Taylor & Jensen, 2022).

One unique characteristic of Augmented Focus is that it uses advanced directional technology to split incoming sounds into two streams, which are processed separately. The front stream is primarily for speech (the focus stream, which the wearer wants to attend), while the back stream primarily is for background noise (the surrounding stream, which is available, comfortable, and not distracting to the wearer). This approach already addresses some of the aforementioned issues with traditional processing. However, while this approach has been shown to offer significant benefits in a wide range of situations, it has one limitation: the focus stream is processed without knowing the exact communication situation of the wearer. How many people are involved in the conversation? Where are they located? And what are the dynamics of the conversation? When these characteristics are not known, they can obviously not be accounted for by the processing of the focus stream. Thus, while Augmented Focus is able to broadly enhance speech with minimal distortion, it is not able to add contrast to and enhance individual talkers. But that has changed now!

With the introduction of RealTime Conversation Enhancement, the new Signia Integrated Xperience hearing aids completely change the way conversations are processed. Based on a multi-stream architecture, it provides a fundamentally new and different way of analyzing a conversational setup and processing speech, which offers a new level of support when the wearer engages in dynamic group conversations.

In this white paper, we will present and discuss data from a study showing how wearers benefit from Signia Integrated Xperience (IX) with RealTime Conversation Enhancement (RTCE) in group conversations. However, first we will explain the basic functionality of this new and groundbreaking technology.

How it works

The new RTCE technology is developed on top of the Augmented Focus processing (split processing), which was introduced in Signia AX. Split processing enables separate processing of speech and noise by splitting the incoming sound into two different streams (i.e., separately processed sound from the front and the back, respectively).

In Signia IX, controlled by advanced sound analysis, RTCE adds three separate focus streams to the split-processing focus stream in the front hemisphere. Consequently, RTCE provides unprecedented improvements in situations where the wearer engages in conversations with one or multiple talkers in background noise.

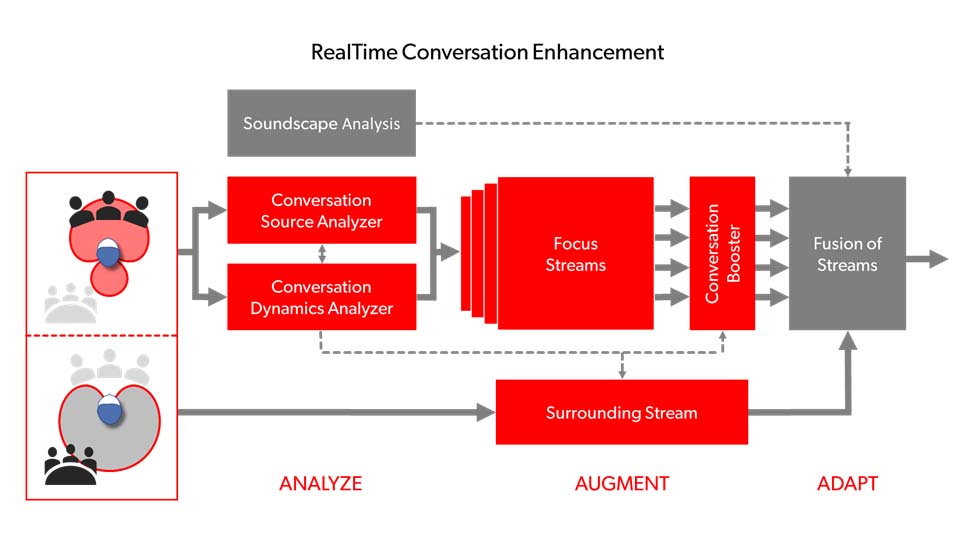

The basic principles of RTCE are shown in Figure 1. As indicated in the figure, the RTCE processing follows a three-stage approach: Analyze, Augment, and Adapt. Note that the stages and their elements represent a simplified description of the processing. In reality, the split processing (shown to the left) and the three RTCE stages occur simultaneously in a highly complex, integrated process. However, the three stages and their elements, as outlined in Figure 1, provide a basic overview of how RTCE works. Next, with reference to the figure, we describe the role of each stage.

Figure 1. Simplified diagram showing the functionality of the RealTime Conversation Enhancement technology. Bold lines and arrows indicate the flow of the streams, while thin dotted lines and arrows indicate control paths.

Analyze. In the first stage, the incoming sound is analyzed by the Conversation Source Analyzer. This stage determines whether speech is present in the front hemisphere and uses a proximity detector to assess the relevance of the speech. The detector can distinguish relevant speech produced by conversation partners close to the wearer from irrelevant speech such as voices in the background (e.g., babble noise in a restaurant) or distinct talkers that are far away from the relevant conversation partners. When relevant speech is detected, the location of each talker is determined. This is done by applying a high number of advanced filters to the binaural microphone signals. Utilizing spatial sound cues in the microphone signals, the filters are designed to be responsive at different angles in the front hemisphere. Together, the filters span a fine (high-resolution) grid, which enables the directions to active talkers to be determined by the most responsive filters.

In parallel with – and using input from – the Conversation Source Analyzer, the Conversation Dynamics Analyzer determines the entire conversation layout. Besides the locations of relevant conversation partners, this includes analyzing the turn-taking dynamics in the conversation and estimating locations and levels of non-speech sound sources. Altogether, the analysis involves the processing of 192,000 data points per second. The processing power at such high speed enables real-time analysis of dynamic conversations where talkers move around and change positions. Equally important, it allows the wearer to turn their head as they want without the Conversation Dynamics Analyzer losing track of the conversation layout.

Augment. Three independent and highly dynamic focus streams are created using advanced binaural beamforming and added to the split-processing front-hemisphere focus stream. The four Focus Streams are integrated in the Conversation Booster, which ensures the optimal enhancement of speech within each focus stream and a perfect balance between the streams. Together, the four focus streams cover the entire front hemisphere and allow separate processing of speech coming from different directions, such as spatially separated conversation partners.

The information about the conversation layout allows suppression of noise from non-speech directions, including noise sources in the front hemisphere. The speech processing in the focus streams can be adjusted according to the dynamics of the conversation and thereby create a clear contrast to the background noise. In parallel with the focus stream processing, the Surrounding Stream – which includes the background sound the wearer may not want to focus on, but still wants to be able to hear – is processed independently. This allows for a completely different type of processing of the surrounding background noise. For example, by providing more aggressive noise reduction to the surrounding stream while taking the entire conversation layout into account, the wearer will feel immersed in the context of the conversation, without the surrounding sound masking the target speech.

Adapt. The fundamental aim of the Analyze and Augment stages of RTCE is to enable a smooth adaptation to any type of conversation the wearer wants to engage in. The full adaptation effect is obtained in the fusion of the focus streams and the surrounding stream. Together, the streams create a live auditory space where the conversation partners stand out against the background noise, as depicted visually in Figure 2. The focus streams will adapt to any changes in the conversation layout, e.g., when talkers enter or leave the conversation, when they move around or when the wearer turns their head. Thus, the active talkers in the conversation are continuously being tracked and augmented through the focus streams.

Figure 2. Simplified visual illustration of the live auditory space created by RealTime Conversation Enhancement. Morphing the independent focus streams results in enhancement of the individual talkers in a group conversation, and the live auditory space will adapt immediately to changes in the conversation layout.

The focus streams and the surrounding stream are mixed intelligently, partly based on input from the motion and acoustic sensors in the Soundscape Analysis and thereby taking the entire listening situation into account. As the focus streams are constantly adapting their position, width, and level of enhancement, refreshing 1,000 times a second, they will adapt immediately to any changes in the conversation layout and thereby provide the optimal processing at all times.

The combined results of the three stages of the RTCE processing is a listening experience that effectively adapts to both the conversation layout and the entire acoustic environment. This allows the wearer to be fully part of and contribute to the conversation while still being immersed in the surroundings.

Putting RealTime Conversation Enhancement to the test

The audiological performance of RealTime Conversation Enhancement was tested in a lab study conducted at Hörzentrum Oldenburg, Germany. The focus of the study was to investigate how RTCE affects speech understanding in noise, with wearers participating in a group conversation situation.

Methods

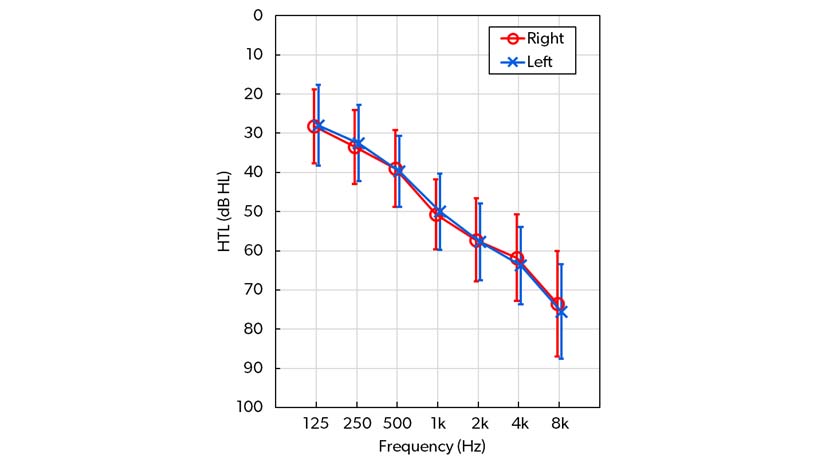

Twenty participants (10 female, 10 male, age range: 55-82 years, mean age: 72 years) with sensorineural sloping hearing loss participated in the study. The mean audiogram of the participants is shown in Figure 3. They were all experienced hearing aid wearers.

Figure 3. Mean audiogram for left and right ears of the 20 participants. Error bars indicate ± one standard deviation.

The participants were fitted with Signia Pure Charge&Go T IX receiver-in-the-canal (RIC) hearing aids, according to the IX First Fit rationale. Half of the participants (n=10) were fitted with closed couplings (power sleeves), and the other half were fitted with vented couplings (vented sleeves). The hearing aids were fitted with two programs. In the first program, all IX features, including RTCE, were activated. In the second program, RTCE with its multi-stream architecture was disabled. All other features, including split processing, were activated.

Two speech tests were conducted. In the first test, a basic speech-understanding baseline was measured using a standard implementation of the German Matrix test, the Oldenburger Satztest (OLSA; Wagener et al., 1999). The test was performed in a listening room where target sentences were presented from a loudspeaker in front of the participant (at 0°), while unmodulated, speech-shaped masking noise was presented from a loudspeaker behind the participant (at 180°). Both loudspeakers were positioned 1.3 m from the participant. The task of the participant was to repeat the target sentences, and the experimenter then scored the number of correctly repeated words. The noise was presented at a fixed level of 65 dBA, while the level of the speech was varied adaptively from sentence to sentence depending on the number of words repeated correctly. The adaptive procedure included presentation of 20 sentences, and it allowed determination of the signal-to-noise ratio (SNR) at which 80% of the words could be repeated correctly. This speech reception threshold will be referred to as SRT80.

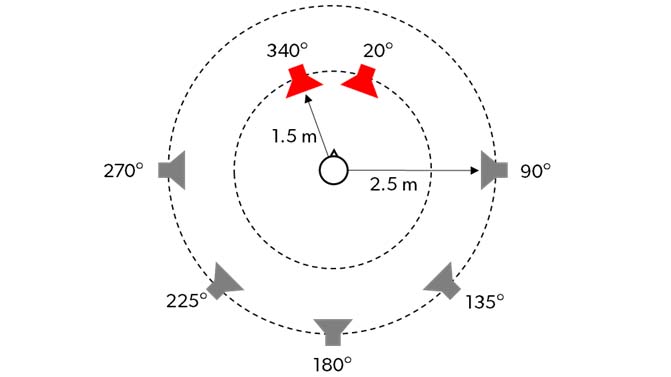

The second test was also based on the standard OLSA test, but to assess speech-in-noise performance in a scenario simulating a realistic group conversation, some modifications in the test setup were introduced. First, the test was done in a larger and more reverberant room (a meeting room). Second, to simulate the presence of multiple talkers, the target sentences were presented alternately from two loudspeakers positioned 1.5 from the participant at 20° and 340° azimuth. The participants were allowed to act naturally by turning their head if desired, but they were not required to do so. Third, the masking noise was babble noise created by playing the target sentence material continuously from five loudspeakers positioned 2.5 m from participant at 90°, 135°, 180°, 225° and 270° azimuth, that is, from directions behind and to the side of the participant with different sentences being presented from the different loudspeakers. The loudspeaker setup is shown in Figure 4. The total level of the babble noise was fixed at 67.5 dBA. The task of the participant was the same as in the standard OLSA test, and the adaptive procedure was also the same, however, with 30 sentences being presented in the modified test. Thus, the outcome of the test was SRT80.

Figure 4. Loudspeaker setup used in the modified OLSA test, simulating a group conversation scenario. Target sentences were presented alternately from the two red loudspeakers, while masking sentences (babble noise) were presented from the gray loudspeakers.

Each participant performed each test with both hearing aid settings: RTCE ON and RTCE OFF. The order of test conditions was counterbalanced across participants to reduce order effects. The participants were always blinded to the identity of the program under test. In both tests, prior to the actual testing, a training round was completed to familiarize the participants with the task.

Results

Standard OLSA

The results of the standard OLSA test are shown in Figure 5. The left plot in Figure 5 shows the mean SRT80 values across the 20 participants while the right plot shows the individual SNR benefits of RTCE observed in the test, i.e., the individual differences between SRT80 obtained with RTCE OFF and ON respectively. Please note that a lower SRT80 means better performance. That is, a lower (better) score on the SRT80 equates with greater wearer benefit.

Figure 5. Left plot: Mean SRT80 for RTCE OFF and ON, across 20 participants in the standard OLSA test. Error bars indicate one standard deviation. Right plot: The individual SRT80 differences between RTCE OFF and ON. Red circles show positive differences, indicating an SNR benefit provided by RTCE.

Figure 5 shows the mean SRT80 with RTCE turned off and on was -7.2 dB and -8.8 dB, respectively. Thus, RTCE provided a mean SNR benefit of 1.6 dB. On an individual level, the SNR benefit was observed for 18 out of the 20 participants (90%).

The data were analyzed with repeated measures ANOVA with SRT80 as the dependent variable, with HA setting (RTCE OFF/ON) as an independent variable, and with type of coupling as a categorical factor. The analysis showed a significant effect of HA setting (F(1, 18) = 27.76, p < .0001) and a significant effect of coupling (F(1, 18) = 5.38, p < .05), but no significant interaction between HA setting and coupling (F(1, 18) = .0003, n.s.). Thus, even though there was a difference between the two subgroups (where the group with closed couplings performed better than the group with vented couplings), the SNR benefit provided by RTCE was the same for both groups, and a Tukey HSD post-hoc test confirmed that the RTCE benefit was significant in both subgroups (p < .01). In summary, the analysis showed a clear and statistically significant benefit of RTCE in the standard OLSA test.

Modified OLSA

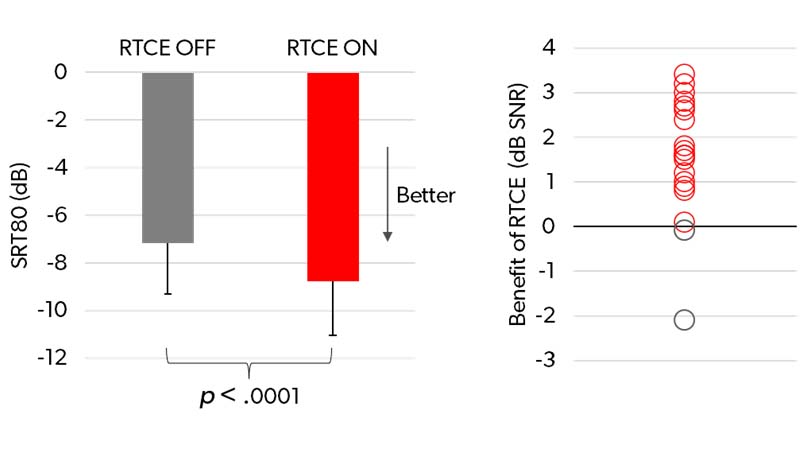

Figure 6 shows the SNR benefits provided by RTCE, which were observed in the modified OLSA test where a group conversion scenario was simulated. Again, the left plot shows the mean SRT80 for RTCE OFF and ON, respectively, and the right plot shows the individual benefits.

Figure 6. Left plot: Mean SRT80 for RTCE OFF and ON, across 20 participants in the modified OLSA test simulating a group conversation. Error bars indicate one standard deviation. Right plot: The individual SRT80 differences between RTCE OFF and ON. Red circles show positive differences, indicating an SNR benefit provided by RTCE.

The left plot in Figure 6 shows the mean SRT80 for RTCE turned off and on was -2.5 dB and -3.6 dB respectively. Thus, in this more complex test scenario, RTCE provided a clear mean benefit of 1.1 dB. Worth noticing in the right plot, on an individual level, 19 of the 20 participants (95%) performed better in the test with RTCE.

The data were analyzed in the same way as the data from the standard OLSA test. A repeated measures ANOVA showed a highly significant effect of HA setting (F(1, 18) = 43.16, p < .00001), but no significant effect of coupling (F(1, 18) = 0.051, n.s.) and no significant interaction between HA setting and coupling (F(1, 18) = .060, n.s.). Thus, in this test, the two subgroups performed at the same level, and they experienced the same SNR benefit of RTCE. A Tukey HSD post-hoc test showed that the RTCE was statistically significant for both subgroups (p < .01). In summary, the results from the modified OLSA test also showed a clear and highly significant benefit of RTCE in the simulated group conversation.

Discussion

The results in this study pointed in the same and unambiguous direction: In both speech tests, adding RTCE with its multi-stream architecture to the existing split processing offered a significant improvement in the participants’ ability to understand speech in the challenging conditions.

While the standard OLSA test is a fairly simple test, it offers a good simulation of a typical one-on-one conversation where the hearing aid wearer is facing a conversation partner with noise coming from behind. In the test, a mean SRT80 improvement of 1.6 dB was observed. Based on the value of the slope of the underlying psychometric function of the standard OLSA test provided by Wagener & Brand (2005), an improvement of 1.6 dB corresponds to around 25% better speech understanding. This observed benefit indicates how RTCE can have a substantial impact also in ‘simple’ conversation setups where the ability to adapt to the conversation layout allows more focus on the conversation partner in front. While the same effect in principle could be obtained with a narrow beamformer, the narrow beamformer would not allow the wearer to turn their head during the conversation or allow a third person to enter the conversation (without missing out). Due to its continuous analysis and adaptation to the conversation layout, RTCE allows such actions, which contribute to a more natural listening experience where the wearer can feel fully immersed in the context of the conversation.

In the modified OLSA test, the test scenario was more complex and much closer to a group conversation scenario with speech coming from different directions in front of the participant and with fluctuating babble noise coming from different directions behind the participant. The increased complexity was directly reflected in mean SRT80 values being around 5 dB higher compared to the values observed in the standard test. Having more than one target direction and using spatially distributed speech babble as masking noise (in a more reverberant room) simply makes the test more difficult than the standard test at a given SNR.

The most important and noteworthy result in the modified OLSA test is that RTCE also provides a substantial benefit in group conversations. It was a striking observation that 95% of the participants performed better in the test with RTCE turned on. The observed highly significant mean SNR benefit of 1.1 dB corresponds to an improvement in speech understanding of close to 20% (using the same value of the slope of the psychometric function as used for the standard OLSA test; Wagener & Brand, 2005). This will offer the wearer a clear benefit in a group conversation, compared to not having access to RTCE.

In combination, the results from the two tests indicate that RTCE provides a substantial benefit in different types of conversation layouts, including both one-on-one conversations and more complex conversation scenarios with multiple conversation partners. When the study results are coupled with RTCE’s ability to track and adapt to changes in the conversation layout, it is evident that RTCE offers completely new possibilities for wearers who want to fully engage in a conversation.

The improvement in speech understanding offered by RTCE not only allows the wearer to understand more when being part of a conversation, it also enables the wearer to contribute more actively – and more confidently – to the conversation. The less the wearer must struggle to understand their conversation partners, the more cognitive resources can be saved. These resources instead can be spent on the task of responding to what is being said, and the wearer thereby is empowered to contribute more to the conversation.

From this perspective, it is important that all voices, both those of conversation partners and the wearer’ own voice, are processed optimally. This makes Signia IX’s Own Voice Processing (OVP) 2.0 another critical element in the hearing care professional’s toolbox. Optimizing the sound of the wearer’s own voice can positively impact the wearer’s contribution to conversations (Powers et al., 2018). The ability of OVP 2.0 to improve the perception of own voice (Jensen et al., 2022) – also in situations with background noise – is therefore a vital element of the wearer’s conversation experience. It is worth mentioning that in cases where wearers use Signia Assistant to report perceptual problems in a given listening situation, both RTCE and OVP 2.0 performance can be adjusted to improve the listening experience. Given these fine-tuning capabilities, Signia Assistant is a powerful tool for wearers looking for improvements in conversational situations.

Summary

In this paper, we have described the new RealTime Conversation Enhancement technology with its multi-stream architecture, which is introduced on the Signia Integrated Xperience platform. The aim of the technology is to enable wearers to increase their engagement in conversations, especially in dynamic group conversations where background noise is present.

By applying a highly advanced sound analysis, RTCE can detect the locations of relevant conversation partners. The multi-stream architecture creates an auditory space where the conversation partners are augmented, making it easier for the wearer to participate in and contribute to the conversation. By updating the system 1,000 times a second, it adapts to any changes in the conversation layout, e.g., when talkers move or when the wearer turns their head.

The perceptual benefits of RTCE were investigated in a study. The main conclusions were:

- In a simple speech test simulating a one-on-one conversation with the conversation partner situated in front of the participant, activating RTCE provided a significant improvement in speech understanding with 90% of the participants showing better performance with RTCE.

- In a more complex speech test, simulating a group conversation scenario, with conversation partners speaking alternately from directions to the left and right of the participant, activating RTCE also provided a significant improvement in speech understanding with 95% of the participants showing better performance with RTCE.

- In combination, the results from the two tests suggest that RTCE can significantly improve the wearer’s conversational experience, making it easier to understand and contribute to the conversation.

References

Best S., Serman M., Taylor B. & Høydal E.H. 2021. Augmented Focus. Signia Backgrounder. Retrieved from www.signia-library.com.

Eberts S. 2020. Dining Out for People with Hearing Loss. The Hearing Journal, 73(1), 16.

Jensen N.S., Høydal E.H., Branda E. & Weber J. 2021. Augmenting speech recognition with a new split-processing paradigm. Hearing Review, 28(6), 24-27.

Jensen N.S., Pischel C. & Taylor B. 2022. Upgrading the performance of Signia AX with Auto EchoShield and Own Voice Processing 2.0. Signia White Paper. Retrieved from www.signia-library.com.

Nicoras R., Gotowiec S., Hadley L.V., Smeds K. & Naylor G. 2022. Conversation success in one-to-one and group conversation: a group concept mapping study of adults with normal and impaired hearing. International Journal of Audiology, 1-9.

Petersen E.B., MacDonald E.N. & Sørensen A.J.M. 2022a. The Effects of Hearing-Aid Amplification and Noise on Conversational Dynamics Between Normal-Hearing and Hearing-Impaired Talkers. Trends in Hearing, 26, 1-17.

Petersen E.B., Walravens E. & Pedersen A. 2022b. Real-life Listening in the Lab: Does Wearing Hearing Aids Affect the Dynamics of a Group Conversation? Proceedings of the 26th Workshop on the Semantics and Pragmatics of Dialogue, August 22-24, 2022, Dublin.

Picou E.M. 2022. Hearing aid benefit and satisfaction results from the MarkeTrak 2022 survey: Importance of features and hearing care professionals. Seminars in Hearing, 43(4), 301-316.

Powers T.A., Davis B., Apel D. & Amlani A.M. 2018. Own Voice Processing Has People Talking More. Hearing Review, 25(7), 42-45.

Taylor B. & Jensen N.S. 2022. Evidence Supports the Advantages of Signia AX’s Split Processing. Signia White Paper. Retrieved from www.signia-library.com.

Taylor B. & Jensen N.S. 2023. Unlocking Quality of Life Benefits Through Firmware and Apps. Hearing Review, 30(5), 24-26.

Wagener K., Brand T. & Kollmeier B. 1999. Entwicklung und Evaluation eines Satztests für die deutsche Sprache. I-III: Design, Optimierung und Evaluation des Oldenburger Satztests (Development and evaluation of a sentence test for the German language. I-III: Design, optimization and evaluation of the Oldenburg sentence test). Zeitschrift für Audiologie (Audiological Acoustics), 38, 4-15.

Wagener K.C. & Brand T. 2005. Sentence intelligibility in noise for listeners with normal hearing and hearing impairment: influence of measurement procedure and masking parameters. International Journal of Audiology, 44(3), 144-156.