Speech Recognition Benefit Obtained from Binaural Beamforming Hearing Aids: Comparison to Omnidirectional and Individuals with Normal Hearing

By Matthias Froehlich, PhD, Katja Freels, Dipl.Ing., and Thomas A. Powers, PhD

Learning Objectives

As a result of this Continuing Education Activity, participants will be able to:

- Explain the benefit Narrow Directionality can bring for those with mild to moderate hearing loss, compared with those who have normal hearing.

- Describe the basic study set-up used in the studies.

- Describe the subject characteristics shown in these studies to result in the most benefit from Narrow Directionality.

Abstract

Objective: Clinical evaluation of the efficacy of a new hearing aid binaural beamforming algorithm; specifically, comparing the aided speech recognition of individuals with hearing loss using the beamforming algorithm to their performance with omnidirectional processing, and to that of a second group of participants with normal hearing.

Design: Speech recognition in noise was assessed at two different sites using the Oldenburger Satztest (Site 1) and the Hearing In Noise Test (Site 2). The target sentence was presented from 0°, and the competing speech material (uncorrelated sentences of the same speech test) was presented from seven loudspeakers surrounding the participant.

Study Sample: The hearing impaired individuals all had bilaterally symmetrical mild-to-moderate downward sloping sensory/neural hearing loss (Site 1: n=29, mean age 68.8; Site 2: n=14, mean age 65.8). A second group of participants were individuals with normal hearing (Site 1: n=15, mean age 64.5; Site 2: n=14; mean age 58.1).

Results: Statistical analysis revealed a significant advantage (average SNR improvement of 5.0 dB) for the beamforming algorithm when compared to omnidirectional processing. When the beamforming algorithm was used by the hearing impaired participants, there also was a significant difference between groups at both sites, with better speech recognition performance for the hearing impaired groups fitted with the binaural beamforming hearing aids. Cohen’s d revealed a large effect size for this latter finding for both sites.

Background

Improving speech understanding in background noise has been a long-standing request from hearing aid users when obtaining hearing aids, and likewise, a decades-old design criterion for hearing aid manufacturers. Historically, directional technology has been the most successful wearable solution. Directional microphone hearing aids, using a single directional microphone, were introduced (in the U.S.) in 1971. Early research revealed that this technology indeed provided improved speech understanding in background noise, in at least the somewhat-contrived clinical test protocols (see Mueller, 1981 for review).

The use and availability of directional microphone products waned in the 1980s and early 1990s due to the popularity of custom instruments, particularly the completely-in-canal (CIC) style, which did not have the space available for the required two port inlets. A directional hearing aid resurgence, however, occurred in the mid-1990s, when dual-microphone directional processing was introduced and promoted (Valente, Fabry, & Potts, 1995; Ricketts & Mueller, 1999; Ricketts & Dhar, 1999).

The use of the dual-microphone scheme has allowed for other innovative processing which provides patient benefit for selected listening situations. The switching between omnidirectional and directional can now be linked to the signal classification system and occur automatically. Additionally, frequency-specific adaptive polar patterns can lock on a specific noise source, or track moving interfering noise sources, allowing for less amplification of the noise from these sources and improved speech recognition (Ricketts, Hornsby, & Johnson, 2005). This adaptive technology also can be extended to create an anti-cardioid pattern, which has been shown to improve speech understanding in background noise when the desired speech signal is from behind the user (Mueller, Weber, & Bellanova, 2011; Kuk & Keenan, 2012; Wu, Stangl, Bentler, & Stanziola, 2013). The most recent development with directional hearing aids involves wireless communication, which allows for the exchange of audio data received by the microphones of both the right and left hearing aids and can be used to achieve narrow beamforming. Limited research with this technology has been encouraging (Kreikemeier, Margolf-Hackl, Raether, Fichtl, & Kiessling, 2013; Picou, Aspell, & Ricketts, 2014).

This paper reports on the clinical trials for a hearing aid with a newly designed binaural beamforming algorithm using wireless audio data transfer, similar to that used in previous research.1 This technology was described in detail by Kamkar-Parsi et al. (2014), and is briefly reviewed here. The beamforming algorithm takes as inputs the local signal, which is the unilateral directional signal, and the contralateral signal. The contralateral signal refers to the unilateral directional signal transmitted from the hearing aid located on the other side of the head, via the bilateral wireless link. The unilateral directional signals (from the local side and the contralateral side) are already enhanced signals from the respective unilateral adaptive directional microphones, with increased noise reduction from the back hemisphere (Powers & Beilin, 2013). The output of the beamformer is then generated by linearly adding the weighted local signals and the weighted contralateral signals; weighting is partly determined by head-shadowing effects. The algorithm also has specific properties which enhance the target signal and attenuate lateral interfering speech coming from outside the frontal target angular range (+/-10°). Kamkar-Parsi et al. (2014) report that this bilateral processing differs from other schemes of this type in that ear differences are maintained, the beamforming is fully adaptive, and there is dedicated integration of bilateral noise reduction.
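As a rough illustration of the linear combination described above, the sketch below forms each output sample as a weighted sum of the local and contralateral directional signals. The function name, the sample values, and the fixed scalar weights are hypothetical; the algorithm described by Kamkar-Parsi et al. (2014) adapts its weighting (partly from head-shadow effects), which this sketch does not attempt to model.

```python
def beamformer_output(local, contra, w_local, w_contra):
    """Weighted linear sum of two equally long sample sequences.

    local    -- samples of the local unilateral directional signal
    contra   -- samples received from the contralateral hearing aid
    w_local, w_contra -- scalar weights (fixed here; an assumption for illustration)
    """
    if len(local) != len(contra):
        raise ValueError("local and contralateral signals must have the same length")
    return [w_local * l + w_contra * c for l, c in zip(local, contra)]


# Hypothetical sample values and weights, for illustration only.
local_signal = [0.2, 0.5, -0.1, 0.4]
contra_signal = [0.1, 0.3, 0.0, 0.2]
output = beamformer_output(local_signal, contra_signal, w_local=0.6, w_contra=0.4)
```

In a real device the weights would vary over time and frequency; the fixed values here only show the shape of the computation.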

Methods

The research we report was conducted at two different sites: the Hörzentrum Oldenburg, Germany (Site 1) and the University of Northern Colorado, Greeley, CO (Site 2). For some of the categories, where there were differences between sites, we report the data separately.

Participants

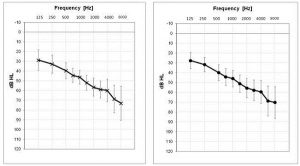

Site 1: All participants in the normal hearing group (n= 15) had audiometric thresholds of 25 dB or better for 250-4000 Hz. There were 3 males and 12 females, with a mean age of 64.5 (range = 47 to 75 years). The participants in the hearing-impaired group (n=29) all had symmetrical downward-sloping sensory/neural hearing losses (See Figure 1 for mean audiogram). They ranged in age from 34 to 78 with a mean age of 68.8; 13 males and 16 females.

Figure 1. Mean audiogram for the right ear (right panel) and left ear (left panel) for the hearing impaired participants (n=29) at Site 1.

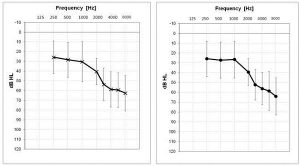

Site 2: Prior to testing at Site 2, a power analysis using a 0.80 power value was conducted. For the 0.05 alpha level, the power analysis indicated 11 participants. The normal hearing group (n= 14) had audiometric thresholds of 25 dB or better for the frequencies 250-4000 Hz. The mean age was 58.1 (range = 52 to 68 years); 5 males and 9 females. The participants in the hearing-impaired group (n=14) all had symmetrical downward-sloping sensorineural hearing losses (See Figure 2 for mean audiogram). There were 7 males and 7 females, and they ranged in age from 60 to 72 with a mean age of 65.8.

Figure 2. Mean audiogram for the right ear (right panel) and left ear (left panel) for the hearing impaired participants (n=14) at Site 2.

Hearing Aids

The hearing aids used in this research were the Siemens binax Pure 7bx receiver-in-the-canal (RIC) instruments. The gain and output of the hearing aids were programmed for each individual based on their hearing thresholds, according to the manufacturer’s proprietary fitting algorithm (referred to as “binax fit”). For experimental testing, the hearing aids were programmed to either omnidirectional, or with the frontal beamforming algorithm activated, termed Narrow Directionality in the software. All other special features such as digital noise reduction and feedback suppression were activated and set to the manufacturer’s default settings. All feature settings, other than the Narrow Directionality, were set identically for the omnidirectional and beamforming testing. The hearing aids were coupled to the ear using the standard Siemens closed-dome RIC fitting tips.

Speech Material

Site 1: The target speech material used at Site 1 was the sentences of the Oldenburger Satztest, commonly referred to as the OLSA (Kollmeier & Wesselkamp, 1997). All sentences in this material follow the structure: subject-verb-number-adjective-object, (e.g., Robert buys five red flowers), spoken in German. The test sentences were created by a random combination of 50 words. The background competing signal also was the OLSA sentences, presented uncorrelated from seven different loudspeakers surrounding the listener, with the gaps between sentences removed. That is, while the target OLSA sentence was presented, the listener would also be presented seven other different OLSA sentences. Additionally, a speech babble was added to the competing material, presented at a level 15 dB below the sentences.

Site 2: The target speech material at this site was the sentences of the Hearing In Noise Test (HINT; Nilsson, Soli, & Sullivan, 1994). The HINT is composed of 250 sentences, which are categorized into 25 lists. The sentences for the HINT are adapted from Bamford-Kowal-Bench (BKB) sentences (Bench, Kowal, & Bamford, 1979), and are revised from the original British versions to equate length and content for American English. Consistent with the test conditions of Site 1, the competing signal also was the HINT sentences, with the gaps between sentences removed, presented uncorrelated from the seven loudspeakers surrounding the listener. Therefore, during experimental testing, while listening for the target HINT sentence, the participant also would be presented seven other random HINT sentences from the different locations. The same speech babble as used at Site 1 also was added to the competing HINT sentences, presented 15 dB below the sentence material.

Procedures

Testing at both sites was conducted in a standard audiometric test suite. The array for the presentation of the target and competing speech material consisted of eight loudspeakers (Genelec 8030B) surrounding the participant, equally spaced at 45° increments, starting at 0° (i.e., 45°, 90°, 135°, etc.). The participant was seated 1.5 meters from all loudspeakers in the center of the room, directly facing the loudspeaker at 0°, which was used to present the target sentences. The competing signals were presented from the seven other loudspeakers. The competing signals were calibrated for 65 dBA for each individual loudspeaker, resulting in 72 dBA at the position of the participant when all seven loudspeakers were activated. This signal remained constant at this level for all testing.

The test procedure was the same for both groups, except that the hearing-impaired group was fitted bilaterally with the hearing aids prior to the testing. For the hearing impaired group, the order of the aided omnidirectional and beamforming conditions was counterbalanced among subjects. The same OLSA and HINT lists were presented to both the normal hearing and hearing impaired groups at the respective sites. The competing noise was introduced, and the target sentences were presented adaptively according to the standard test procedures of the OLSA and the HINT. For the OLSA, following a practice list, one list of 20 sentences was administered and scored. The testing was automated, and the step-size automatically was reduced as testing progressed; the threshold in noise signal-to-noise ratio (SNR) also was automatically calculated. For the HINT, following a practice list, two lists of ten sentences were presented for each participant, using a 2-dB adaptive approach. The conventional HINT scoring method for 20 items was applied to calculate reference threshold for sentences.
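To make the idea of adaptive presentation concrete, the following is a minimal sketch of a simple up-down track with a fixed 2-dB step: the SNR is lowered after a correct response and raised after an error, and the presented levels are averaged. It is a simplification under stated assumptions, not the standard OLSA or HINT procedure (it omits the OLSA step-size reduction and the conventional HINT scoring rule). The function names and the deterministic "listener" are hypothetical.

```python
def run_adaptive_track(respond, start_snr_db, n_sentences, step_db=2.0):
    """Run a simple 1-up/1-down adaptive track.

    respond      -- callable taking an SNR (dB) and returning True if the
                    sentence was repeated correctly (hypothetical listener model)
    start_snr_db -- SNR of the first sentence
    n_sentences  -- number of sentences to present
    step_db      -- fixed step size in dB
    Returns (presented_levels, mean_of_presented_levels).
    """
    snr = start_snr_db
    presented = []
    for _ in range(n_sentences):
        presented.append(snr)
        if respond(snr):
            snr -= step_db   # correct: make the next sentence harder
        else:
            snr += step_db   # incorrect: make the next sentence easier
    return presented, sum(presented) / len(presented)


def example_listener(snr_db):
    """Hypothetical listener who is always correct at or above -6 dB SNR."""
    return snr_db >= -6.0


levels, threshold = run_adaptive_track(example_listener, start_snr_db=0.0, n_sentences=20)
```

With this idealized listener the track descends from the starting level and then alternates around the point where responses change from correct to incorrect, which is why the average of the presented levels can serve as a threshold estimate.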

Results

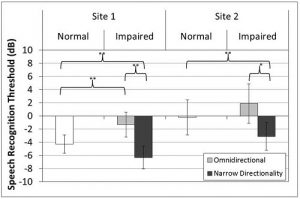

The mean speech recognition thresholds in noise (in dB; +/- 1 standard error) for both the OLSA (Site 1) and the HINT (Site 2) are displayed in Figure 3. These scores were analyzed using a series of t-tests. Family-wise error rate was controlled by dividing the significance level by the number of comparisons (3 at each performance site; Dunn, 1961). For all comparisons, significance level was set to p < 0.01.

Figure 3. Mean speech recognition thresholds (dB) for the two performance sites. White bars indicate performance for listeners with normal hearing. Grey bars indicate performance for listeners with hearing loss for the omnidirectional setting (light grey) and the Narrow Directionality setting (dark grey). Error bars represent ±1 standard deviation from the mean. * indicates a significant difference of p < 0.01; ** indicates a significant difference of p < 0.001.
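The family-wise correction described above (dividing the significance level by the number of comparisons) can be sketched as follows. The family-wise level of 0.05 is an assumption made for illustration; the article states only that three comparisons were made per site and that the criterion was set to p < 0.01, which is stricter than 0.05 divided by 3.

```python
def bonferroni_alpha(family_alpha, n_comparisons):
    """Per-comparison significance level after dividing by the number of comparisons."""
    return family_alpha / n_comparisons


def is_significant(p_value, family_alpha, n_comparisons):
    """True if p_value falls below the corrected per-comparison level."""
    return p_value < bonferroni_alpha(family_alpha, n_comparisons)


# Assumed family-wise level of 0.05 with the 3 comparisons per site reported above.
per_comparison_alpha = bonferroni_alpha(0.05, 3)

# The Site 2 omnidirectional comparison reported p = 0.055.
site2_omni_significant = is_significant(0.055, 0.05, 3)
```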

For Site 1, a paired-samples t-test revealed that speech recognition thresholds were significantly better with Narrow Directionality than with the omnidirectional setting (t(28) = -15.28, p < 0.001, Cohen’s d = -2.76). In addition, an independent sample t-test revealed that listeners with normal hearing performed better than those with hearing loss, when the hearing aids were in the omnidirectional setting (t(27) = 2.00, p < 0.001, Cohen’s d = 1.24). Conversely, when the hearing aids were in the Narrow Directionality setting, listeners with hearing loss outperformed those with normal hearing (t(27) = 3.25, p < 0.001, Cohen’s d = -1.64).

For Site 2, analysis revealed a similar pattern of results. Speech recognition thresholds were significantly better with Narrow Directionality than in the omnidirectional setting (t(13) = -8.72, p < 0.001, Cohen’s d = -1.82). When fitted with the Narrow Directionality setting, listeners with hearing loss outperformed those with normal hearing (t(27) = 3.25, p < 0.01, Cohen’s d = 1.25). Although there was a trend for listeners with normal hearing to outperform listeners with hearing loss in the omnidirectional setting, this trend did not reach statistical significance (t (27) = -2.00, p = 0.055, Cohen’s d = -0.77).
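For readers less familiar with these statistics, the sketch below shows how a paired-samples t statistic and a pooled-standard-deviation Cohen's d are typically computed. The thresholds are invented for illustration and are not study data, and the article does not state which formula for Cohen's d was used, so the pooled version here is an assumption.

```python
import math
import statistics


def paired_t(cond_a, cond_b):
    """Paired-samples t statistic and degrees of freedom for cond_a minus cond_b."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


def cohens_d_pooled(group_a, group_b):
    """Cohen's d for two independent groups using the pooled standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * statistics.variance(group_a)
                  + (nb - 1) * statistics.variance(group_b)) / (na + nb - 2)
    return (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(pooled_var)


# Hypothetical SRTs in dB SNR (lower is better); NOT data from this study.
srt_narrow = [-9.0, -8.0, -10.0, -7.5, -9.5]
srt_omni = [-4.0, -3.5, -4.5, -3.0, -4.0]
srt_normal = [-7.0, -6.5, -7.5, -6.0, -8.0]

t_stat, df = paired_t(srt_narrow, srt_omni)    # negative t: lower SRT with narrow
d_groups = cohens_d_pooled(srt_narrow, srt_normal)
```

Because lower thresholds indicate better performance, a negative t or d in this arrangement means the first-listed condition or group performed better.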

To explore the possible effects of degree of hearing loss and age on speech recognition thresholds, correlation analysis was performed. For both sites, results revealed no significant relationship between age or pure tone average (1000, 2000, and 4000 Hz) and performance in omnidirectional, performance with Narrow Directionality, and benefit from Narrow Directionality. In total, these results suggest that with the Narrow Directionality setting, listeners with hearing loss outperform their peers with normal hearing, whereas the opposite is true with the omnidirectional setting.

Discussion

The purpose of this research was to compare the speech recognition in noise performance of hearing-impaired listeners using a new binaural beamforming algorithm to omnidirectional processing, and also to the performance of individuals with normal hearing. As expected, speech recognition was significantly better with the beamforming algorithm than with omnidirectional processing. This benefit was an identical average 5.0 dB SNR at both test sites. Statistical analysis also revealed that the aided performance with the beamforming algorithm not only equaled that of the normal hearing group, but was significantly better. The advantage was just over 2 dB for Site 1 and nearly 3 dB for Site 2 (Figure 3).

Note that the absolute scores for Site 1 are considerably better than those of the participants at Site 2. We would not necessarily expect the results of two different speech tests to agree, as different groups of participants were used, and the tests may not be equivalent. The sentence structure for the OLSA also is more predictable than that for the HINT, which could lead to better performance. Additionally, the scoring of the two tests is different. For the OLSA, credit is given for each word of a sentence that is repeated correctly. For the HINT, all key words of the sentence must be repeated correctly for the sentence to be scored correct, which could cause the speech reception threshold in noise to be worse than if individual word scoring was used. There is an English version of the OLSA and a German version of the HINT, but we are not aware of studies which have directly compared these two speech tests. For the present research, we were only interested in the relative differences between hearing loss groups.

As shown in Figure 3, the binaural beamforming algorithm provided a 5.0 dB SNR advantage at both sites over bilateral omnidirectional processing. It is somewhat difficult to compare this to previous research with directional processing, as the benefit obtained in any given study is easily influenced by experimental design (e.g., azimuth of competing signals, reverberation of room, speech material, type of background noise). Picou et al. (2014) evaluated speech recognition using instruments that employed a directional beamforming algorithm similar to that used in this research. However, they did not use an adaptive SNR, but rather different fixed SNRs with the Connected Speech Test, and therefore a direct comparison of benefit cannot be made. Appleton and Konig (2014) present data for bilateral beamforming instruments using a similar test paradigm and an adaptive speech test (OLSA speech test, competing cafeteria noise presented from 11 surrounding speakers). They report the benefit for bilateral beamforming (compared to omnidirectional) for both a static and adaptive setting to be 3.6 to 3.8 dB SNR.

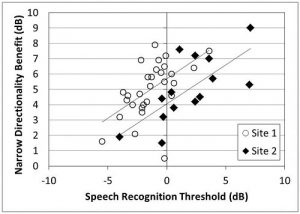

For both sites, there was no significant relationship between age or hearing loss and performance in omnidirectional, performance with Narrow Directionality, and benefit from Narrow Directionality. One other relationship to consider is the omnidirectional performance compared to the Narrow Directionality benefit. That is, did individuals who performed more poorly or better in the omnidirectional mode obtain more or less benefit when using the beamforming algorithm? There is research to suggest that factors such as working memory can impact benefit derived from different signal processing for speech recognition in background noise (Ng, Ruder, Lunner, & Syskind, 2013). To further investigate the relationship between performance and benefit, a partial correlation analysis was performed between performance in the omnidirectional setting and benefit from the Narrow Directionality setting (calculated as SRT in the omnidirectional setting minus SRT in the Narrow Directionality setting; positive scores indicate more benefit). This analysis was conducted by controlling for age and pure tone average (1000, 2000, and 4000 Hz). Results from both sites, as illustrated in Figure 4, indicated a significant correlation between the speech recognition threshold in noise in the omnidirectional mode and benefit from the Narrow Directionality setting (Site 1: r(39) = 0.50, p < 0.01; Site 2: r(10) = 0.72, p < 0.01). A simple correlation analysis was also performed between performance and benefit without controlling for age or pure-tone average. This analysis also revealed a statistically significant relationship at both sites (Site 1: r(29) = 0.55, p < 0.01; Site 2: r(14) = 0.71, p < 0.05).

Figure 4. Relationship between speech recognition threshold in the omnidirectional setting (dB) and benefit from the Narrow Directionality setting (benefit=SRT in omnidirectional minus SRT in Narrow Directionality). Open circles indicate data from Site 1 (p<0.01) and filled diamonds represent data from Site 2 (p<0.01).

These significant performance/benefit results suggest that listeners who tend to have the most difficulty understanding speech in background noise will derive the most benefit from the Narrow Directionality processing (see regression lines in Figure 4). This finding is consistent with the work of Ricketts and Mueller (2000). We speculate that this relationship is somewhat related to the competing noise signal. In the present study, the participants experienced both informational and energetic spatially-separated masking effects. Hornsby et al. (2006) suggest that for a listening condition of this type, increasing audibility using omnidirectional processing results in only limited improvements in speech understanding.
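The benefit score and the partial correlation described above can be sketched as follows. Benefit is the omnidirectional SRT minus the Narrow Directionality SRT, and the partial correlation is obtained with the standard recursive formula, removing one covariate at a time. All values are hypothetical and are not study data; the function names are illustrative, and the article does not say which software or method was used for its analysis.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, covariates):
    """Correlation of x and y controlling for each sequence in covariates."""
    if not covariates:
        return pearson(x, y)
    z, rest = covariates[-1], covariates[:-1]
    r_xy = partial_corr(x, y, rest)
    r_xz = partial_corr(x, z, rest)
    r_yz = partial_corr(y, z, rest)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical listeners; NOT data from this study.
srt_omni = [-3.0, -4.5, -2.0, -5.0, -1.5, -3.5]      # dB SNR, omnidirectional
srt_narrow = [-8.5, -9.0, -8.0, -9.5, -7.5, -8.0]    # dB SNR, Narrow Directionality
age = [66, 70, 61, 72, 64, 68]                       # years
pta = [42, 45, 38, 40, 47, 36]                       # dB HL, mean of 1000/2000/4000 Hz

# Positive benefit means a lower (better) SRT with Narrow Directionality.
benefit = [o - n for o, n in zip(srt_omni, srt_narrow)]

r_simple = pearson(srt_omni, benefit)
r_partial = partial_corr(srt_omni, benefit, [age, pta])
```

Under this sign convention, a positive correlation means that listeners with higher (poorer) omnidirectional thresholds obtained more benefit.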

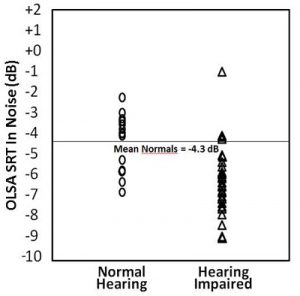

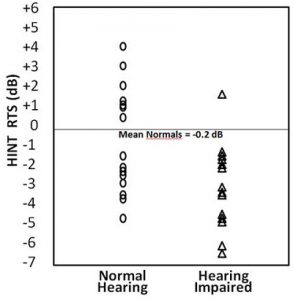

Regarding the advantage of binaural beamforming compared to individuals with normal hearing, while the data were significant, for clinical applications it is also important to consider effect size. As reported, the Cohen’s d for Site 1 was -1.64 and for Site 2 it was -0.77. These values are considered a “large effect” (Cohen, 1988). To illustrate individual differences in performance between the two groups, we have plotted the individual scores for both test sites (Figure 5, Figure 6). Observe that for Site 1 (Figure 5), the OLSA scores for 25 of the 29 hearing-impaired participants were better than the mean performance for the normal hearing group. Similarly, for Site 2 (Figure 6), all but one of the 14 HINT scores for the hearing-impaired group were better than the mean of the normal hearing group.

Figure 5. The individual distribution of the speech recognition threshold (SRT) in noise for the Oldenburg Satztest (OLSA) for the participants at Site 1. The mean performance for the normal hearing group is shown as a reference.

Figure 6. The individual distribution of the reference threshold for sentences (RTS) in noise for the Hearing In Noise Test (HINT) for the participants at Site 2. The mean performance for the normal hearing group is shown as a reference.

In summary, with clinical testing at two different sites, for these two groups of hearing-impaired individuals, we have shown that when they are fitted bilaterally with binaural beamforming hearing aids, their performance for speech recognition in noise is significantly better than with omnidirectional processing, and more importantly, significantly better than that of a control group with normal hearing. The participants in this study had mild-to-moderate hearing impairment, and we recognize that this same finding may not be present for more severe hearing losses. Moreover, we also suggest further study with different levels of reverberation, and using different types of competing noise stimuli.

Footnote

1. In the audiologic hearing aid literature, there is a clear differentiation between the terms bilateral and binaural. We recognize that from a hearing aid fitting standpoint, the beamforming process for these instruments would best be described as bilateral, not binaural. However, in the signal processing literature, this type of beamforming consistently has been referred to as binaural. In fact, in this body of literature, the term bilateral beamforming sometimes has been used to describe two unilateral beamformers. In this paper, therefore, we use the term binaural beamforming, to emphasize that the process described here involves wireless audio data sharing between the two instruments.

References

Appleton, J., & Konig, G. (2014). Improvement in speech intelligibility and subjective benefit with binaural beamformer technology. Hearing Review, 21(10), 40-42.

Bench, J., Kowal, A., & Bamford, J. (1979). The BKB (Bamford-Kowal-Bench) sentence lists for partially-hearing children. British Journal of Audiology, 13(3), 108-112.

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences (second ed.). Mahwah, NJ: Lawrence Erlbaum Associates.

Dunn, O. J. (1961). Multiple comparisons among means. Journal of the American Statistical Association, 56(293), 52-64.

Hornsby, B. W., Ricketts, T. A., & Johnson, E. E. (2006). The effects of speech and speechlike maskers on unaided and aided speech recognition in persons with hearing loss. Journal of the American Academy of Audiology, 17, 432-447.

Kamkar-Parsi, H., Fischer, E., & Aubreville, M. (2014). New binaural strategies for enhanced hearing. Hearing Review, 21(10), 42-45.

Kollmeier, B., & Wesselkamp, M. (1997). Development and evaluation of a German sentence test for objective and subjective speech intelligibility assessment. Journal of the Acoustical Society of America, 102(4), 2412-2421.

Kreikemeier, S., Margolf-Hackl, S., Raether, J., Fichtl, E., & Kiessling, J. (2013). Comparison of different directional microphone technologies for moderate-to-severe hearing loss. Hearing Review, 20(11), 44-45.

Kuk, F., & Keenan, D. (2012). Efficacy of a reverse cardioid directional microphone. Journal of the American Academy of Audiology, 23(1), 64-73. doi: 10.3766/jaaa.23.1.7.

Mueller, H. G., Weber, J., & Bellanova, M. (2011). Clinical evaluation of a new hearing aid anti-cardioid directivity pattern. International Journal of Audiology, 50(4), 249-254. doi: 10.3109/14992027.2010.547992.

Mueller, H. G. (1981). Directional microphone hearing aids: A 10-year report. Hearing Instruments, 32(11), 18-20, 66.

Ng, E., Ruder, M., Lunner, T., & Syskind, M. (2013). Effects of noise and working memory capacity on memory processing of speech for hearing-aid users. International Journal of Audiology, 52(7), 433-441. doi: 10.3109/14992027.2013.776181.

Nilsson, M., Soli, S. D., & Sullivan, J. A. (1994). Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. Journal of the Acoustical Society of America, 95(2), 1085-1099.

Picou, E., Aspell, E., & Ricketts, T. (2014). Potential benefits and limitation of three types of directional processing in hearing aids. Ear and Hearing, 35(3), 339-352. doi: 10.1097/AUD.0000000000000004.

Powers, T., & Beilin, J. (2013). True advances in hearing aid technology: What are they and where’s the proof? Hearing Review, 20(1), 32.

Ricketts, T., & Dhar, S. (1999). Comparison of performance across three directional hearing aids. Journal of the American Academy of Audiology, 10(4), 180-189.

Ricketts, T., & Mueller, H. G. (1999). Making sense of directional microphone hearing aids. American Journal of Audiology, 8(2), 117-127.

Ricketts, T. A., Hornsby, B. W., & Johnson, E. E. (2005). Adaptive directional benefit in the near field: Competing sound angle and level effects. Seminars in Hearing, 26(2), 56-69.

Valente, M., Fabry, D., & Potts, L. (1995). Recognition of speech in noise with hearing aids using dual microphones. Journal of the American Academy of Audiology, 6(6), 440-449.

Wu, Y.H., Stangl, E., Bentler, R.A., & Stanziola, R. W. (2013). The effect of hearing aid technologies on listening in an automobile. Journal of the American Academy of Audiology, 24(6), 474-485. doi: 10.3766/ja